Blog URL at hrishikamath.com

Hello there, thanks for visiting this page. My blog has moved to hrishikamath.com. Won't put effort into even making this page look nice for you :P.

Personal data is a valuable personal asset; it can be used for economic, social, or even malicious benefit. Most internet companies survive by collecting users' personal data in exchange for services. This usually leads to data being shared with third parties such as advertisers, which on a personal level can be a privacy violation. Data is typically anonymized before it is distributed, meaning identifying attributes are removed. But this still does not ensure the data is anonymous. The growing amount of data available online opens the door to linkage attacks, which re-identify a user in one dataset by matching attributes that also appear in other datasets. One popular example is the attack on the Netflix Prize dataset. Third-party services such as advertisers usually don't need personally identifying information, just a sufficient statistical representation of the data. This motivates analysis that reflects the overall dataset population but does not capture any personally identifying information.

Differential Privacy (DP) is one take on privacy which ensures that no single individual is significantly affected by being part of the dataset or the data collection process. It not only protects individuals from linkage attacks but also provides a concrete way of quantifying privacy loss.

DP is based on the Fundamental Law of Information Recovery, which states that "overly accurate answers to too many questions will destroy privacy in a spectacular way". The following inequality is the foundation of differential privacy:

$$\Pr[M(D_1) \in S] \le e^{\epsilon} \, \Pr[M(D_2) \in S] + \delta$$

Consider two databases: D1, which lacks a given row of data, and D2, which includes it. M is the analysis (or mechanism) and S is any set of its possible outputs. How much the output of the mechanism can differ when the datapoint is included is quantified by epsilon ($\epsilon$). The inequality holds with D1 and D2 swapped as well, so, setting delta aside, the probability of any output with the person's data in the database is within a factor of exp(epsilon) of the probability without it, in either direction.

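A mechanism that satisfies this is said to be $(\epsilon, \delta)$-differentially private, or $(\epsilon, \delta)$-DP.

The delta term is a small additive slack: loosely, it bounds the tail probability with which the epsilon bound may fail to hold.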
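To make the definition concrete, here is a minimal sketch that checks the (epsilon, delta) condition for two small output distributions. The numbers are made up for illustration: they correspond to a mechanism that reports a person's true yes/no answer with probability 0.75 and the opposite answer otherwise (often called randomized response), so the two databases here differ in one person's answer rather than in whether the row is present. The function names `dp_slack` and `satisfies_dp` are my own and not from any library.

```python
import math

def dp_slack(probs_a: dict, probs_b: dict, epsilon: float) -> float:
    """Smallest delta such that Pr_a[S] <= exp(epsilon) * Pr_b[S] + delta
    holds for every set of outputs S (finite output space only)."""
    outcomes = set(probs_a) | set(probs_b)
    return sum(
        max(0.0, probs_a.get(o, 0.0) - math.exp(epsilon) * probs_b.get(o, 0.0))
        for o in outcomes
    )

def satisfies_dp(probs_d1: dict, probs_d2: dict, epsilon: float,
                 delta: float = 0.0, tol: float = 1e-12) -> bool:
    """Check the (epsilon, delta) inequality in both directions.
    tol absorbs floating-point rounding."""
    return (dp_slack(probs_d1, probs_d2, epsilon) <= delta + tol
            and dp_slack(probs_d2, probs_d1, epsilon) <= delta + tol)

# Hypothetical output distributions of the mechanism on two neighbouring databases.
out_d1 = {"yes": 0.75, "no": 0.25}  # the person's true answer is "yes"
out_d2 = {"yes": 0.25, "no": 0.75}  # the person's true answer is "no"

print(satisfies_dp(out_d1, out_d2, epsilon=math.log(3)))      # ratio 0.75/0.25 = 3
print(satisfies_dp(out_d1, out_d2, epsilon=0.5))              # epsilon too small
print(satisfies_dp(out_d1, out_d2, epsilon=0.5, delta=0.34))  # slack covered by delta
```

The third call shows the role of delta: a smaller epsilon can still be claimed if the leftover probability mass is absorbed by delta.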

To make it more intuitive why we quantify privacy this way, let us take a small example. Say a small town of 100 people has a total income of 200 million. The next time you calculate this number, it has dropped to 180 million because there are now 99 people. Using auxiliary data sources you could find out who left the town, and from that it isn't hard to infer that their income was 20 million. This could discourage people from participating in such statistics, as it leaks sensitive information. These are known as differencing attacks.
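The town example can be sketched in a few lines. The individual incomes and resident names below are invented so that the totals match the 200 million and 180 million figures above; only the two published totals and the identity of the person who left are assumed to be known to the attacker.

```python
def total_income(incomes: dict) -> int:
    """The only statistic the town publishes: the sum of all incomes."""
    return sum(incomes.values())

# Hypothetical town of 100 residents whose incomes add up to 200 million.
before = {f"resident_{i}": 1_000_000 for i in range(90)}
before.update({f"resident_{i}": 10_000_000 for i in range(90, 99)})
before["resident_99"] = 20_000_000

# One resident leaves; the attacker learns who from an auxiliary source.
after = {name: income for name, income in before.items() if name != "resident_99"}

# Differencing attack: subtract the two exact totals.
inferred_income = total_income(before) - total_income(after)
print(inferred_income)
```

Neither total reveals much on its own; it is the difference between two exact answers that exposes one person's income.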

In simple words, a user's privacy is violated if more is learnt about that specific user when the analysis is performed. If a general inference is made about the population and the same applies to the specific user, the user's privacy is not violated.

Data collection and analysis must be limited to statistics that represent the entire population, in a way that maintains each individual's confidentiality.

You can get complete privacy by outputting pure junk, or complete utility for a certain task by outputting the raw data. So there is a tradeoff between the two. Differential privacy is not a direct solution to privacy but a mathematical theory to quantify privacy and guide the development of private algorithms, just as asymptotic analysis is not itself an algorithm but a theory that guides the development of algorithms. Quantifying utility matters because the adversary interacts with the database in the same manner as the analyst who could benefit from it.

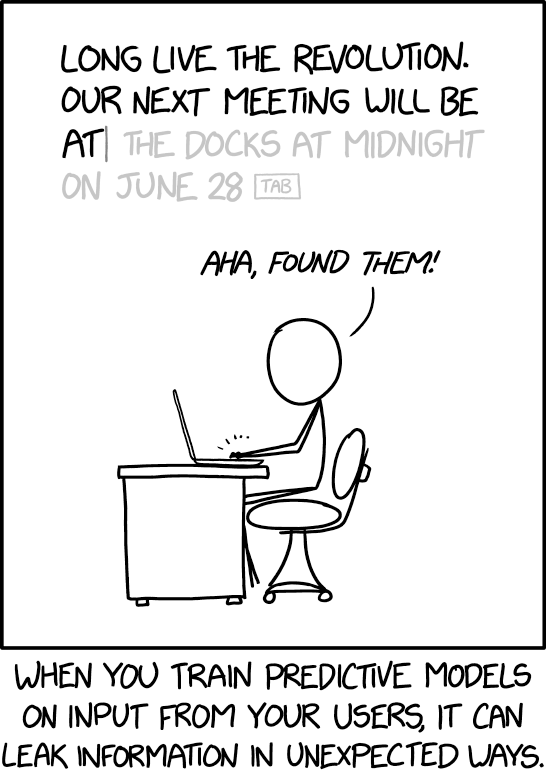

While DP can seem very pessimistic or unnecessary, the issues it can quantify and help solve go beyond basic population statistics, even to training neural networks. What are the possible harms of neural networks? Certain approaches can be used to reconstruct a neural network's training data. Think about it this way: you are using an NLP next-word prediction service from an MLaaS (Machine Learning as a Service) provider such as Amazon AWS or Microsoft Azure. You could possibly get it to predict a next word that was supposed to be confidential. Or, with a facial recognition service, you could show it images of people similar to your targets to learn whether those targets were part of the training data.

Further, differential privacy does not aim to protect the data of most individuals; it is designed to protect every individual's data. The few people with unusual data often drive the most interesting insights in an analysis, and they need to be protected too.

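This introduces another term, called sensitivity, used to characterize a query over a database. It can be given as

$$\Delta f = \max_{D_1, D_2} \lvert f(D_1) - f(D_2) \rvert$$

where f is the query and the maximum is taken over pairs of databases D1 and D2 that differ in one person's row. Intuitively, it measures how much the value of the outcome could change if a given person were not in the database. It is similar to the epsilon definition of privacy, but here we deal with absolute values rather than probabilities. Sensitivity depends on the query and on the kind of data the database holds.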
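As a rough illustration of sensitivity, the sketch below measures how much a query's answer changes when any single row is removed from one particular database. This is only an empirical check on one made-up dataset; the definition itself takes the worst case over all possible databases. The helper name `removal_sensitivity` and the income cap are assumptions for the example.

```python
def removal_sensitivity(query, database: list) -> float:
    """Largest absolute change in query(database) when one row is removed.
    Measured on this specific database only, not the worst case overall."""
    full_answer = query(database)
    return max(
        abs(full_answer - query(database[:i] + database[i + 1:]))
        for i in range(len(database))
    )

def capped_sum(cap: int):
    """Sum query that clips every row to at most `cap` before adding."""
    return lambda rows: sum(min(row, cap) for row in rows)

# Hypothetical incomes: three ordinary earners and one outlier.
incomes = [1_000_000, 1_000_000, 1_000_000, 20_000_000]

print(removal_sensitivity(len, incomes))                    # counting query
print(removal_sensitivity(sum, incomes))                    # plain sum
print(removal_sensitivity(capped_sum(5_000_000), incomes))  # sum with a cap
```

A count changes by at most one when a person leaves, while a plain sum can change by as much as the largest income. Capping each row bounds the change by the cap, which is why the range of values in the database matters.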

A major guarantee of DP is that it protects individuals' data against auxiliary information. That is, even if the output from a given database is combined with data from other databases, the individuals' data still remains private.

Another major property of DP is composition. Composition quantifies how privacy degrades not only over a single mechanism or query but over a series of mechanisms or queries. The simplest rule is basic composition, which states that if k algorithms that are each $(\epsilon, \delta)$-DP are run, the overall privacy guarantee is $(k\epsilon, k\delta)$-DP.
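Basic composition amounts to adding up the epsilon and delta of every query that is run. A minimal sketch of that bookkeeping, with made-up parameter values and a function name of my own choosing:

```python
import math

def basic_composition(guarantees: list) -> tuple:
    """Total (epsilon, delta) under basic composition: the epsilons and
    the deltas of the individual mechanisms simply add up."""
    total_epsilon = sum(eps for eps, _ in guarantees)
    total_delta = sum(delta for _, delta in guarantees)
    return total_epsilon, total_delta

# Hypothetical example: the same (0.5, 1e-6)-DP query is run k = 4 times.
k = 4
per_query = (0.5, 1e-6)
total_epsilon, total_delta = basic_composition([per_query] * k)

# Matches the (k * epsilon, k * delta) rule, up to floating-point rounding.
print(math.isclose(total_epsilon, k * per_query[0]))
print(math.isclose(total_delta, k * per_query[1]))
```

Because the function takes a list of pairs, it also covers the case where the queries have different parameters, which basic composition handles by summing them in the same way.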

I thank my friend Shravan Sridhar for reviewing and helping me improve this article.
